
Beyond the brief overview of ParaStation MPI given in the introduction, this chapter takes a more detailed look at the building blocks that form the ParaStation MPI system and at its overall architecture.

As already mentioned, ParaStation MPI consists of two modules:

- the high-performance communication subsystem and

- the cluster management facility.

The communication subsystem of ParaStation MPI is composed of several libraries and kernel modules. Applications that want to benefit from the ParaStation MPI communication system have to be built against these libraries, except when the TCP bypass is used. Furthermore, the cluster nodes running such an application must have the kernel module(s) loaded and, if shared libraries are used, the shared versions of the communication libraries available.

The management part of ParaStation MPI is implemented as a daemon process running on each of the cluster nodes. These daemons constantly gather and exchange information in order to maintain a single, consistent global view of the cluster. Applications that want to profit from this view of the cluster have to talk to these daemons. This is usually done via an interface implemented in another library. Thus, parallel applications have to be linked against this library, too.

Both parts of ParaStation MPI will be discussed in detail within the next sections of this chapter.

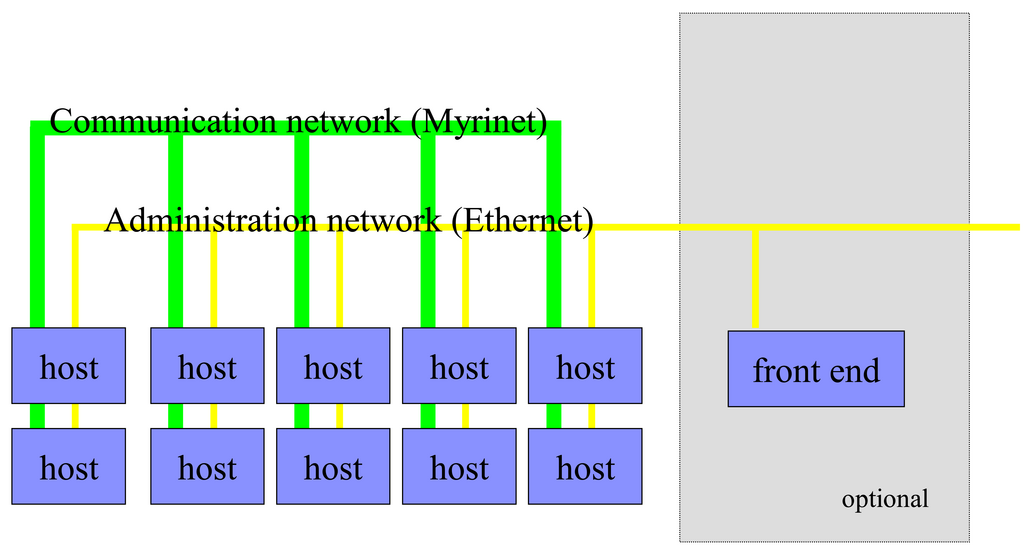

In order to preserve the high communication bandwidth of the fast network for the applications, application-related communication traffic is strictly separated from the network traffic caused by administration tasks. Therefore, the ParaStation MPI communication subsystem is used only for application traffic. The concept of splitting the two types of communication is shown in Figure 2.1.

Often, both types of communication share the same physical network. This is a common architecture, especially for Ethernet-based clusters. However, it may cause performance problems for some parallel applications.

As depicted in Figure 2.1, it is possible to have an optional frontend machine that is not connected to the cluster by the application network but is nevertheless fully integrated into the ParaStation MPI management system. Thus, parallel applications can be started from this machine, making it unnecessary for the user to log in, or even be able to log in, to every cluster node. Furthermore, the frontend machine might act as a home-directory and compile server for the users.

The ParaStation MPI high speed communication subsystem supports different communication paths and interconnect technologies. Depending on the physically available network(s) and the actual configuration, these interconnects are automatically selected by the communication library.

ParaStation MPI currently supports a variety of communication paths to transfer application data. Not all paths are always available, depending on the physical network(s) and system architecture (uni-processor or SMP) installed and the actual configuration.

The following list shows all interconnects and protocols currently supported by ParaStation MPI. The list also defines the order in which the process management selects a transport.

- Shared Memory

If two or more processes of a parallel task run on the same physical node, shared memory will be used for communication between these processes. This typically happens on SMP nodes, where one process per CPU is spawned.

- InfiniBand using verbs

If InfiniBand is available on this cluster, ParaStation MPI may make use of a verbs driver.

- 10G Ethernet or InfiniBand using DAPL

Especially for 10G Ethernet, this interface should be preferred over p4sock or TCP, if available. The DAPL interface may also be used for InfiniBand.

- Myrinet using GM

This interconnect is only available if Myrinet and GM are installed on this cluster.

- Gigabit Ethernet using ParaStation MPI protocol p4sock

This is the most effective and therefore preferred protocol for Gigabit Ethernet. It is based on the ParaStation-specific protocol p4sock. For 10G Ethernet, use p4sock only if DAPL is not available.

- Ethernet using TCP

This is the default communication path. In fact, all connections providing TCP/IP can be used, independent of the underlying network.

Independent of the underlying transport networks and protocols in use, ParaStation MPI uses reliable communication.

While spawning processes on a cluster, ParaStation MPI will decide which interconnects can be used for communication. Using environment variables, the usage of particular interconnects and/or protocols may be controlled by the user. See ps_environment(7) for details.
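The ordered selection described above can be pictured as a first-match walk over a priority list. The following Python sketch is purely illustrative: the function `select_transport`, the short transport labels, and the `disabled` and `same_node` parameters are hypothetical names chosen for this example. They do not reflect the actual ParaStation MPI implementation or the real environment variables, which are documented in ps_environment(7).

```python
# Priority order taken from the list of interconnects in this section.
# The short labels are hypothetical and used only in this sketch.
TRANSPORT_PRIORITY = [
    "shm",     # Shared Memory
    "verbs",   # InfiniBand using verbs
    "dapl",    # 10G Ethernet or InfiniBand using DAPL
    "gm",      # Myrinet using GM
    "p4sock",  # Gigabit Ethernet using p4sock
    "tcp",     # Ethernet using TCP (default path)
]


def select_transport(available, disabled=frozenset(), same_node=False):
    """Return the first usable transport in priority order.

    available: transports physically present and configured.
    disabled:  transports the user has switched off (assumed mechanism).
    same_node: True if both processes run on the same physical node;
               shared memory is only considered in that case.
    """
    for name in TRANSPORT_PRIORITY:
        if name == "shm" and not same_node:
            continue  # shared memory cannot cross node boundaries
        if name in available and name not in disabled:
            return name
    raise RuntimeError("no usable transport between these processes")
```

For example, `select_transport({"shm", "p4sock", "tcp"})` yields `"p4sock"` for two processes on different nodes, and falls back to `"tcp"` once `"p4sock"` is placed in `disabled`.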

ParaStation MPI provides several communication interfaces, suitable for different levels of functionality and environments.

- PSPort

The PSPort interface is the native low-level communication interface provided by ParaStation MPI. Any communication can be done using this interface, although programs using it will not be portable. It is therefore recommended to use a standard interface such as MPI, discussed below.

The main features of the PSPort interface are:

Fragmentation/Defragmentation of large messages.

Buffering of asynchronously received messages.

Provision of ports.

Selective receive.

Thread safe.

Control Data possible in every message.

The PSPort interface is encapsulated in the library libpsport.a.

- TCP bypass

This Linux kernel extension redirects network traffic within a cluster from the TCP layer to the ParaStation MPI p4sock protocol layer. Due to the very small overhead of this protocol, this bypass functionality increases performance and lowers latencies seen by the application.

The application does not notice this redirection of network packets and need not be modified in any way. The user may control the usage of the bypass by setting the LD_PRELOAD environment variable.

- MPI

This is an implementation of the Message Passing Interface (MPI). It is regarded as the standard interface for writing parallel applications.

Its key features are:

Based on MPICH2.

Synchronous and asynchronous communication.

Zero copy communication.

Implements the complete MPI-2 standard.

Support for MPI-IO to a PVFS parallel filesystem.

- RMI

This interface enables even Java applications to profit from the high-performance communication provided by ParaStation MPI.

This interface is mainly a research project and is therefore not included in the standard distribution. The corresponding parts of ParaStation MPI may be obtained on request from ParTec. Please contact <support@par-tec.com>.

The main features of the RMI interface are:

Enables Remote Method Invocation over all supported interconnects.

Serialization of objects is provided.
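Returning to the TCP bypass described earlier in this list: its use is controlled through the LD_PRELOAD environment variable. The following is a minimal sketch of how a launcher script might prepare such an environment. The library path `/opt/example/lib/libbypass.so` and the helper `bypass_env` are placeholders invented for illustration, not names taken from ParaStation MPI; consult the ParaStation MPI documentation for the actual library to preload.

```python
import os

# Hypothetical placeholder; NOT the real ParaStation MPI library path.
BYPASS_LIB = "/opt/example/lib/libbypass.so"


def bypass_env(base_env=None, lib=BYPASS_LIB, enable=True):
    """Return a copy of the environment with `lib` added to, or removed
    from, LD_PRELOAD. The original mapping is left untouched."""
    env = dict(os.environ if base_env is None else base_env)
    # The dynamic linker accepts spaces or colons as LD_PRELOAD separators.
    current = env.get("LD_PRELOAD", "").replace(":", " ").split()
    entries = [entry for entry in current if entry != lib]
    if enable:
        entries.insert(0, lib)  # preload the bypass before other libraries
    if entries:
        env["LD_PRELOAD"] = " ".join(entries)
    else:
        env.pop("LD_PRELOAD", None)
    return env
```

The resulting dictionary can be passed as the `env` argument of `subprocess.run`, so that only the launched application is affected and the application binary itself stays unmodified.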